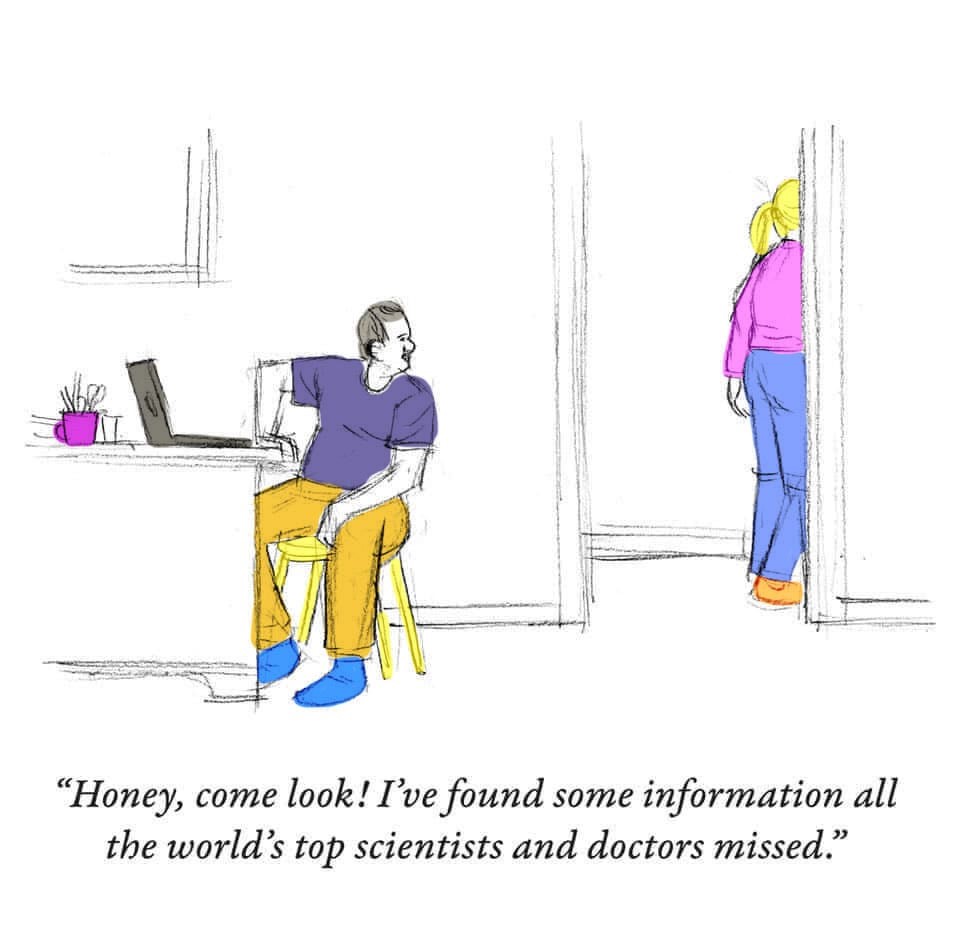

“I do my research!” If I had a dollar for every time I’ve read that statement on social media these past 21 months or seen it displayed on a poster being held by an angry protester, I could…quit doing research, at least for my living.

What does it mean to do your research? To paraphrase a line from a popular movie (where it was said about a different word): “I do not think it means what you think it means.”

My dad, who passed away in 2008 a few months shy of his 93rd birthday, would call or write to me at least once every few days to ask me a question about nutrition, specifically what he should or shouldn’t eat. If I gave him an answer he didn’t like, he would argue with me, tell me I was wrong, and send me his sources: nearly always a newspaper clipping or an article torn from one of the popular “natural” health magazines of the time. If I showed him my references and explained how I had assessed their credibility, he would just scoff. Sometimes, like when he insisted ice cream was a good breakfast (but only if it was on sale), I wished he were right, sale or no sale. But I’m a nutritional biochemist, trained to analyze medical research.

Don’t get me wrong: What my father suffered from (besides a craving for the foods his mother and my mother rarely let him have) is part of human nature, and we all fall prey to it at least some of the time. It’s called confirmation bias: in plainer terms, believing what we want to believe (wishful thinking) and “cherry-picking” the research that supports those beliefs.

That’s the trouble with doing our own research. Most of us have a drive to find the evidence that supports our worldview. Even some extremely well-educated scientists and health care providers, who ought to know better, do this. One even has his own TV show. Often, experts do this to make a lot of money (by touting the benefits of some unproven supplement or remedy), to increase their prominence, or to avoid having to admit they’ve made a huge error. And we might believe them: because they’re experts, because real answers are hard to come by and often unsatisfying, and because… confirmation bias.

How Do You Not Be That Person?

Is it actually possible not to succumb to confirmation bias? It’s not simply a matter of becoming skeptical. After all, the folks who are avoiding getting vaccinated against COVID because they don’t believe the results of the published scientific studies, don’t trust the government, don’t even believe COVID is a real thing—they might be the ultimate skeptics.

In the Renaissance (roughly the 1400s and 1500s), it was possible for a single person, say, Leonardo da Vinci, to be an expert in nearly all areas of science, because not that much was yet known or understood about how things worked. Today, scientists can barely keep up with progress in their own narrow areas of expertise. So if a new research finding in a slightly adjacent field sounds plausible, many of us just assume it’s based on sound evidence. But that’s risky, even for scientists. So how do we navigate the morass of what we constantly hear and read in the media? How do we evaluate what’s plausible in a field we don’t study directly? Here are some important truths, followed by some tips on evaluating what you hear and read.

Scientific truth is not truth in the sense of being immutable—impossible to disprove. All scientific truths are constantly being subjected to further research. You might have heard that science is an iterative process: That’s what this means. Nowhere has this been clearer than in the fast-evolving area of COVID-19 knowledge and research.

Take the early advice from trusted experts that the average person didn’t need to wear a mask but did need to disinfect mail and groceries. Those guidelines were based on evidence gathered from other flu-like viruses, very preliminary evidence on COVID-19, and, to be honest, the fear that if everyone ran out to buy masks, health care workers would face shortages (which they did). Soon afterward, research strongly indicated that the virus spreads mainly through droplets from the noses and mouths of infected people, and much less through touching objects, which in turn supported the effectiveness of masks. To the frustration of scientists, COVID deniers have latched on to this change in recommendations as a way to discredit science, when in fact it is one of the strongest demonstrations of how science works.

Scientific evidence (the results of scientific research) is not influenced by politics, economics, or religion. It is based on trusted, rigorous, high-quality, reproducible research. Policies (guidelines and laws) that governments or businesses make should be based partly on scientific evidence but are often, for better or for worse, based more on economics, expediency, personal belief (remember confirmation bias?), religion, or you name it. That’s not to say that policy must be based on nothing but scientific evidence, for at least two reasons. First, realities like economics have to be considered when telling people what to do or not do, or deciding what the government is willing to pay for them to do. Second, we often lack the scientific evidence to make definitive policy decisions. For example, we lack evidence on whether some drugs work as well for women as they do for men; for that reason, the government may hesitate to recommend those medications for certain groups.

The other and most important thing about evidence is that it varies greatly in strength. And weak evidence is not something you want to hang your hat—or your life—on. What determines the strength of evidence? How do scientists know what to believe? And can you apply a lay person’s version of that to what you hear and read? That’s what the rest of this blog is going to be about.

What Determines the “Strength of Evidence”?

Measuring the strength of evidence means examining a whole body of evidence: ALL the studies that tried to answer a question, considered together, often quantitatively using meta-analysis (a statistical method that combines the results of multiple independent studies that sought to answer the same question, producing a single pooled result). Most often, though, the media present the findings of just one study, completely out of context, as if no one had ever studied the question before, or as if we’re supposed to assume that the latest must be the greatest: the last word, so to speak.

The strength of a whole body of evidence is judged based on about 6 or 7 really important things, but you can actually apply these criteria to the news you hear about individual studies. Still, I will try to distinguish here between rules of thumb for groups of studies and single studies.

- Does this evidence apply to me or the people for whom it was intended? Applicability is a term that refers to whether the “subjects” or study participants were like the people we are interested in (like, if we need to know if a drug works in women, did they test it on women? Men? Worse, mice?). As a scientist, I have to consider applicability when weighing evidence, but you, too, should listen for it in any news report. Often, the splashiest studies were done on mice, meaning the results are essentially meaningless for us humans.

- Are the number and size of the studies big enough? When you hear or read about a new research finding, is it a report on one study or a rigorous review of many studies? I’d say 9,999 times out of 10,000, it’s one study, and no one can draw firm conclusions from a single study. If the report is on a big review of all the relevant studies, it means scientists have weighed the evidence from all those studies (that’s what a systematic review is). But you will seldom hear the results of a systematic review trumpeted in the media, because systematic reviews tend to produce modest, not splashy, results: when all the results are pooled (combined), the splashiness usually gets pretty diluted.

As for the size of a study (the number of people enrolled in it), many human studies are much too small to provide trustworthy results, that is, results that are likely to be repeatable. Human trials are costly and difficult to conduct: much effort must go into recruiting and retaining participants, and they often drop out if the going gets tough. There are formal mathematical methods (power calculations) for determining how many people need to be in a study to be able to trust the findings, but my rule of thumb is that if there are fewer than 100 participants (50 who get the treatment and 50 who get the sugar pill), it’s a small study that should be taken with many grains of salt.

So if a study is very large, especially compared with other studies like it, does that make it a good study? Unfortunately, not necessarily. A study can manage to enroll many participants (imagine a study in which participants were promised they could eat ice cream every day) and still be a poor-quality study (see the next point, on study quality), and this is quite vexing. Why? When the rest of us scientists see a large study, I think we tend to pay more attention to it, warranted or not. We want to believe it, especially if the results are highly (statistically) significant or interesting, or if they feed our own confirmation bias! Worse, if we are looking at a whole group of studies that tried to answer the same question, we might discount the smaller studies. And worse still, if we are doing a meta-analysis, the results of the large study can disproportionately affect the pooled result (statistically pooh-poohing the smaller studies). That’s why, when we conduct meta-analyses, we do what is called a sensitivity analysis: we determine whether and how much the large study may have skewed the result by redoing the meta-analysis with the large study excluded.
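For readers curious about what “pooling” and a sensitivity analysis look like in practice, here is a minimal Python sketch. It assumes a fixed-effect, inverse-variance model (one common way to pool studies; many real meta-analyses use random-effects models instead), and the four studies and their numbers are made up purely for illustration.

```python
import math

# Hypothetical studies: (name, effect estimate, standard error).
# These numbers are invented for illustration. A smaller standard error
# roughly corresponds to a larger study.
STUDIES = [
    ("Small A", 0.10, 0.20),
    ("Small B", 0.05, 0.25),
    ("Small C", 0.12, 0.22),
    ("Large D", 0.60, 0.05),
]


def pool_fixed_effect(studies):
    """Fixed-effect, inverse-variance pooled estimate with a 95% CI."""
    weights = [1.0 / se ** 2 for _, _, se in studies]
    total = sum(weights)
    pooled = sum(w * est for w, (_, est, _) in zip(weights, studies)) / total
    pooled_se = math.sqrt(1.0 / total)
    return pooled, pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se


def weight_shares(studies):
    """Fraction of the total weight each study contributes to the pool."""
    weights = {name: 1.0 / se ** 2 for name, _, se in studies}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def sensitivity_without_largest(studies):
    """Re-pool after excluding the most precise (largest) study."""
    largest = min(studies, key=lambda s: s[2])
    remaining = [s for s in studies if s[0] != largest[0]]
    return largest[0], pool_fixed_effect(remaining)


if __name__ == "__main__":
    est, lo, hi = pool_fixed_effect(STUDIES)
    print(f"All studies: {est:.2f} (95% CI {lo:.2f} to {hi:.2f})")
    for name, share in weight_shares(STUDIES).items():
        print(f"  {name}: {share:.0%} of the weight")
    dropped, (est2, lo2, hi2) = sensitivity_without_largest(STUDIES)
    print(f"Without {dropped}: {est2:.2f} (95% CI {lo2:.2f} to {hi2:.2f})")
```

With these invented numbers, the one large study carries roughly 87% of the total weight, so the pooled estimate is about 0.53 with it and about 0.09 without it: exactly the kind of skew a sensitivity analysis is meant to expose.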

- Is the quality of the study good or poor? Since what you hear about in the news is usually a single study, is it even a good-quality study? And what does that mean? For studies to produce credible, unbiased results, they need to be conducted rigorously, with attention to certain details.

First and foremost, is the study an actual trial, or did researchers simply observe a particular health phenomenon in a group of people and try to associate it with something they consumed (or didn’t), or with something else they were exposed to? Only trials can establish cause and effect. If you’ve read my writing before, you know that observational studies are considered poor quality; only extraordinarily careful design can make them less bad. Most of the health news you hear, especially about food or individual nutrients, is based on observational studies, which makes it suspect from the start.

Not all trials are high quality, though. Among the criteria for a high-quality trial, participants need to be randomly assigned to the treatments (not assigned so that the people you expect to have the best results get the experimental treatment).

They also need to be assigned in such a way that neither they nor the researchers know who is getting the real treatment until the very end of the experiment (this is called blinding; when people know they’re getting the real treatment, they magically tend to do better).
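Here is a minimal Python sketch of what random assignment with blinding can look like. It assumes a simple 1:1 design and a hypothetical setup in which participants and study staff only ever see a neutral kit code, while the key linking codes to treatments is held by someone outside the study team until the end. The function name, the `KIT-` code format, and the participant IDs are all illustrative; real trials typically use more elaborate schemes (for example, block randomization).

```python
import random


def blinded_allocation(participant_ids, seed=None):
    """Randomly assign half the participants to treatment and half to placebo,
    hiding each assignment behind a neutral kit code (hypothetical scheme)."""
    if len(participant_ids) % 2 != 0:
        raise ValueError("this 1:1 sketch needs an even number of participants")
    rng = random.Random(seed)
    arms = ["treatment", "placebo"] * (len(participant_ids) // 2)
    rng.shuffle(arms)  # chance, not the researcher, decides who gets what

    # What participants and study staff see: only an opaque kit code.
    kit_codes = {pid: f"KIT-{i:03d}" for i, pid in enumerate(participant_ids, 1)}
    # The unblinding key, kept sealed until the end of the trial.
    key = {kit_codes[pid]: arm for pid, arm in zip(participant_ids, arms)}
    return kit_codes, key


if __name__ == "__main__":
    ids = [f"P{n}" for n in range(1, 9)]
    codes, key = blinded_allocation(ids, seed=42)
    print(codes)  # safe to share with the study team
    # `key` stays with an independent party until the trial ends.
```

The point of the design is separation: whoever hands out the kits and measures the outcomes never needs to see `key`, so neither they nor the participants can tell who is getting the real treatment.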

The number of dropouts and their reasons for dropping out need to be carefully tracked, because a large number of dropouts could mean that the experimental treatment is unduly burdensome or has unpleasant side effects. Also, and this may seem counterintuitive, the dropouts need to be counted in the analysis as if they had completed the study. We know that in real life, some proportion of patients stop taking their medication or never even get the prescription filled, so it’s important to build this noncompliance into the testing by treating the dropouts as completers who had no benefit from the treatment. Analyzing everyone who was originally assigned to a treatment, dropouts included, is called an intention-to-treat analysis.
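To see why counting dropouts matters, here is a small Python sketch with made-up numbers for a single treatment group: 100 people randomized, 20 drop out, and 48 of the 80 who finish improve. It compares a completers-only success rate with the approach described above, in which dropouts are counted as having had no benefit. (That “no benefit” assumption is one simple, conservative choice; statisticians have other ways of handling missing outcomes.)

```python
from dataclasses import dataclass


@dataclass
class Participant:
    completed: bool  # stayed in the trial until the end
    improved: bool   # had the hoped-for outcome (False for dropouts here)


def completers_only_rate(group):
    """Success rate counting only people who finished the trial."""
    completers = [p for p in group if p.completed]
    return sum(p.improved for p in completers) / len(completers)


def intention_to_treat_rate(group):
    """Success rate counting everyone who was randomized; dropouts are
    treated as having had no benefit."""
    return sum(p.completed and p.improved for p in group) / len(group)


# Hypothetical treatment group: 48 improved, 32 finished without improving,
# 20 dropped out.
treatment_group = (
    [Participant(completed=True, improved=True) for _ in range(48)]
    + [Participant(completed=True, improved=False) for _ in range(32)]
    + [Participant(completed=False, improved=False) for _ in range(20)]
)

if __name__ == "__main__":
    print(f"Completers only:    {completers_only_rate(treatment_group):.0%}")
    print(f"Intention to treat: {intention_to_treat_rate(treatment_group):.0%}")
```

With these invented numbers, the treatment appears to help 60% of people if you count only the completers, but 48% when everyone who was assigned to it is counted, which better reflects the real-world noncompliance the analysis is meant to capture.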

What else? Valid tests need to be used to measure the outcomes, and appropriate statistical tests need to be performed to determine whether the results are statistically significant. The intervention (whether it’s a medication, a surgical procedure, an exercise regimen, or some other treatment) needs to be carried out long enough to reasonably produce the expected results; for example, it would not be reasonable to expect people taking a diet drug or starting an exercise program to lose a measurable amount of weight in 3 days.

And participants should be tracked to ensure they’re really doing their treatment (taking the pills, sticking to the diet, doing the exercise) and that they’re not doing something else that could inadvertently negate the effects of the treatment or make it seem to be working better than it is. For example, if I assign a group of people to take fish oil to see if it helps their depression, I would want to know if they decided to start—or stop—taking antidepressants.

This is a lot, and any of these things, if not done correctly, could bias the results in favor of what the researcher hopes to find. These are the kinds of details that are evaluated when a study that involves a trial is submitted to a scientific journal and undergoes “peer review.”

But when was the last time you heard a news reporter mention any of these details? Probably never. And that is a problem. In fact, many of the studies we hear about in the news or on social media, for example the reports that hydroxychloroquine and ivermectin treat COVID-19 effectively, have not gone through peer review (or have failed peer review and been rejected for publication in a legitimate scientific journal), meaning that their results could be completely invalid and meaningless. But you would never know that unless you became a super sleuth and tried to find out where the study was published (which can be extremely challenging).

- Do the findings agree with other studies? When we examine the strength of a whole body of evidence, that is, all of the studies that have been conducted to try to answer a question, we compare all the findings to see if they agree, and if they don’t, we try to figure out why. If a new study diverges from prior studies, is it because it was more (or less) carefully done? Or was it just that the participants were older or younger or sicker or of a different gender or cultural or racial group? Studies need to be evaluated in light of the research that preceded them. But when you hear about a study on the news, the reporters almost never mention other studies. Every study is portrayed as if it’s the first ever to try to answer a question, or worse, as if it’s the definitive study, the one that will answer the question for all time. This is never the case. Remember: science is iterative!

- How significant are the results, really? I can’t tell you how often I hear a study reported in the news as having earth-shattering results, only to find that the effect of the treatment was barely greater than the effect of the sugar pill, or maybe not greater at all, because the findings varied so much from person to person. Or maybe the effect of the treatment was statistically significant compared with the sugar pill but clinically meaningless: for example, a headache medication that shortens the average person’s headache by just 10 seconds compared with the sugar pill.

- Does the result of the study really have anything to do with an important health outcome? We often hear things like, “This study showed that eating 5 almonds a day prevents heart disease,” when what the study found was that the people who ate the almonds developed a tiny difference in a certain type of fat that may be associated with developing a certain type of heart disease…in rabbits.

- Is it possible that the study’s findings couldn’t answer the most important questions it set out to answer, and that what we’re hearing about is the one statistically significant (but inconsequential) thing the study did find? Relatedly, many studies find no effects at all, and most often, these studies never get published or publicized (a problem known as publication bias).
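Returning to the headache example: a p-value depends on how many people were studied, not just on how big the effect is, so a large enough trial can make even a trivial effect “statistically significant.” The Python sketch below illustrates this with a simple two-sample z-test, assuming (hypothetically) that the medication shortens headaches by 10 seconds on average and that headache length varies from person to person with a standard deviation of 10 minutes. A real analysis would estimate that variability from the data and would likely use a t-test.

```python
import math
from statistics import NormalDist


def two_sample_z_p_value(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference in means between two equal-sized
    groups, assuming a known, common standard deviation (a simplification)."""
    standard_error = sd * math.sqrt(2 / n_per_group)
    z = mean_diff / standard_error
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical headache trial (all numbers invented for illustration).
MEAN_DIFF_SECONDS = 10   # medication vs. sugar pill
SD_SECONDS = 600         # person-to-person variation: 10 minutes

if __name__ == "__main__":
    for n in (100, 50_000):
        p = two_sample_z_p_value(MEAN_DIFF_SECONDS, SD_SECONDS, n)
        verdict = "significant" if p < 0.05 else "not significant"
        print(f"{n:>6} per group: p = {p:.3f} ({verdict}); "
              f"benefit still {MEAN_DIFF_SECONDS} seconds")
```

With 100 people per group, the 10-second difference is nowhere near statistically significant (p is about 0.9); with 50,000 per group, the very same 10 seconds gives p below 0.01. The benefit to an actual headache sufferer is identical in both cases.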

So What Are We to Do?

When I used to give talks or write about how to make sure the science information we’re reading is trustworthy, I would advise reading only certain newspapers, magazines, or websites, but sooner or later, even the most trustworthy sources mess up. The most prominent newspapers in the US and the most prestigious medical websites have published some real whoppers in the past couple of years, for example asserting that we can’t trust foods from genetically modified organisms, or that maybe we should go easy on promoting vaccines because some neuroatypical people find needles scary. Rather than looking for news sources that are always trustworthy, I recommend asking the questions I posed above whenever you hear a report of some scientific finding.

However, there are a small number of websites and individual Twitter accounts that are dedicated to skeptically evaluating supposed scientific breakthroughs and other misinformation in the media. Feel free to check these out; I will add to the list from time to time:

McGill University Office for Science and Society (www.mcgill.ca/oss/). Their mission is “Separating Sense from Nonsense.”

Timothy Caulfield is a professor of law and director of the Health Law Institute at the University of Alberta in Canada. You can find his Twitter posts at @CaulfieldTim, and he frequently writes or is cited in articles about how to identify scientific misinformation in the media. He also hosted a documentary series called “A User’s Guide to Cheating Death.”